Chapter 1: The Explanatory Filter

"For his invisible attributes, namely, his eternal power and divine nature, have been clearly perceived, ever since the creation of the world, in the things that have been made. So they are without excuse."

— Romans 1:20, ESV

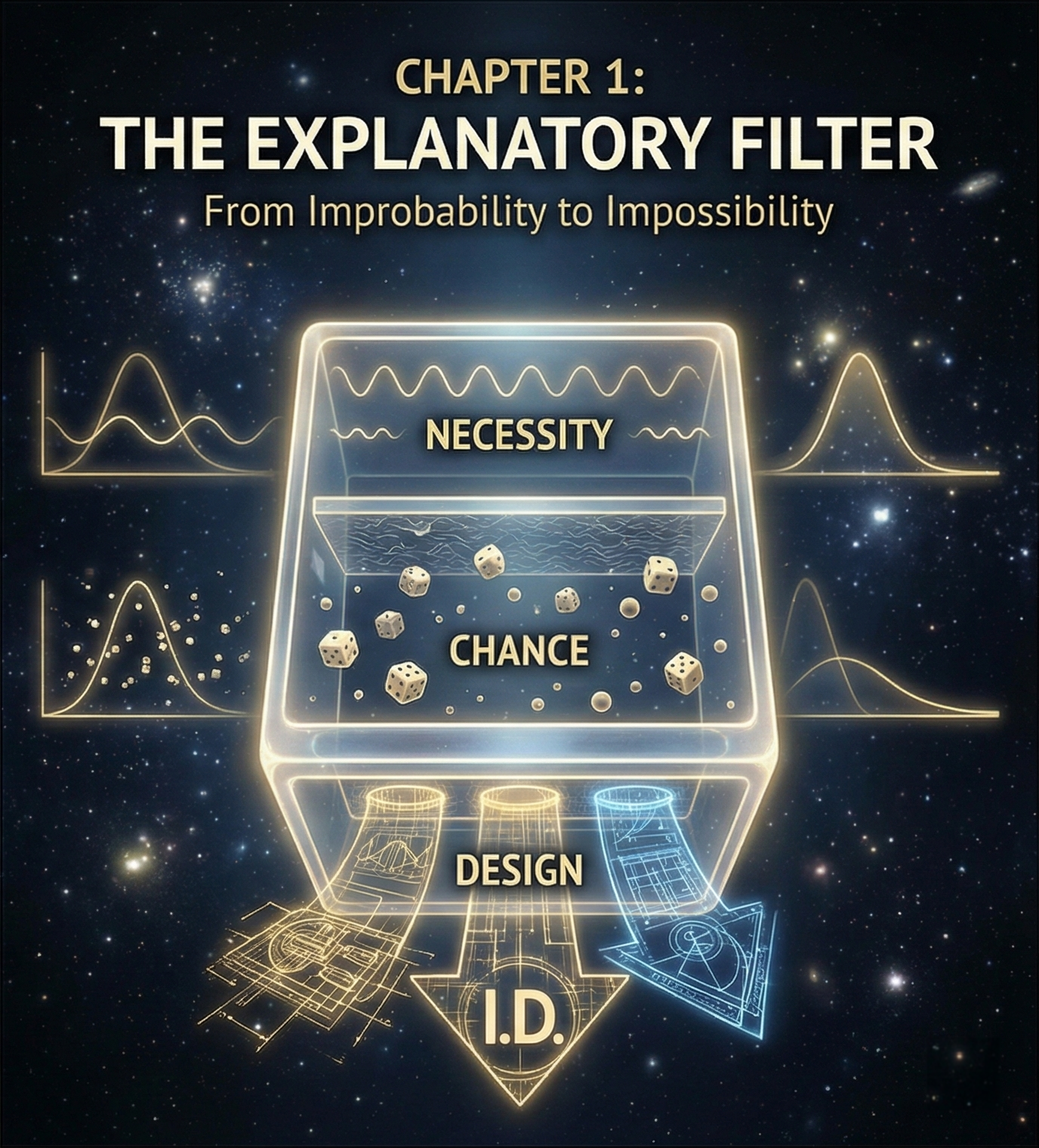

William Dembski's explanatory filter operates as a tripartite logical framework: contingency, complexity, specification. The filter systematically eliminates competing causal modalities until only design remains as a viable explanatory hypothesis. This is not intuition masquerading as methodology—it is rigorous inference to the best explanation applied to causal attribution.

Consider the vast space of possible sequences within which functional proteins must be found. Estimates built on Douglas Axe's experimental work put the probability of randomly assembling a functional 150-amino-acid protein at roughly 1 in 10^164, a probability so small that it lies beyond the reach of the probabilistic resources of the observable universe. Yet proteins are not merely improbable arrangements; they are complex and specified arrangements that conform to independent functional requirements.

This distinction proves crucial. The explanatory filter first asks: Is the event contingent? Could it have been otherwise? If not, it is attributed to necessity. Random sequence assembly is contingent: any of the 20^150 (roughly 10^195) possible sequences of 150 amino acids could in principle emerge. Second: Is it complex? Does its probability fall below the universal probability bound of 10^-150? If not, chance remains a sufficient explanation. At roughly 1 in 10^164, functional proteins fall well below this threshold. Third: Does it conform to an independent pattern? Protein function requires specific three-dimensional conformations that enable catalytic activity, and these are patterns specifiable independently of the sequence itself.
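The three questions can be read as a short decision procedure. The following Python sketch is illustrative only: the function names `sequence_space_log10` and `explanatory_filter` are hypothetical, probabilities are handled as base-10 logarithms to avoid numerical underflow, and the judgments of contingency and specification are simply passed in as inputs decided elsewhere.

```python
import math

# Universal probability bound quoted in the text (10^-150), as a base-10 log.
UPB_LOG10 = -150.0


def sequence_space_log10(alphabet_size: int, length: int) -> float:
    """Return log10 of the number of possible sequences (alphabet_size ** length)."""
    return length * math.log10(alphabet_size)


def explanatory_filter(contingent: bool, log10_probability: float, specified: bool) -> str:
    """Walk the three nodes of the filter in order and return the attributed cause.

    contingent        -- could the event have been otherwise?
    log10_probability -- base-10 log of the event's probability under chance
    specified         -- does the event match an independently given pattern?
    """
    if not contingent:
        return "necessity"          # first node: not contingent
    if log10_probability >= UPB_LOG10:
        return "chance"             # second node: not improbable enough
    if not specified:
        return "chance"             # third node: improbable but unspecified
    return "design"


# 20 amino acids, 150 positions: about 10^195 possible sequences.
protein_space = sequence_space_log10(20, 150)
verdict = explanatory_filter(contingent=True, log10_probability=-164.0, specified=True)
```

Given the 1 in 10^164 estimate cited above, the sketch returns `"design"`; the same event judged unspecified, or assigned a probability above the bound, returns `"chance"`.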

The convergence of improbability and specification creates what Dembski terms 'complex specified information' (CSI). CSI functions as a reliable indicator of intelligent causation because it exhibits the precise characteristics we observe in paradigm cases of design while remaining absent from the outputs of purely natural processes.
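Dembski commonly expresses this quantity in bits, taking the information associated with an event of probability p to be -log2(p). A minimal sketch of that conversion follows, assuming probabilities are supplied as base-10 logarithms; the helper name `information_bits` is hypothetical.

```python
import math


def information_bits(log10_probability: float) -> float:
    """Return -log2(p) for an event whose probability is 10 ** log10_probability."""
    # log2(p) = log10(p) * log2(10), so negate to obtain the information in bits.
    return -log10_probability * math.log2(10)


# The universal probability bound of 10^-150, expressed in bits.
bound_bits = information_bits(-150.0)
```

On this measure the universal probability bound of 10^-150 corresponds to just under 500 bits.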

But CSI analysis extends far beyond molecular biology. The anthropic fine-tuning of the physical constants represents perhaps the most striking example of CSI at universal scales. The cosmological constant must be fine-tuned to within 1 part in 10^120 to permit the formation of galaxies and stars. The strength of the strong nuclear force must hold to within about 1% for stellar nucleosynthesis to proceed. The ratio of the electromagnetic force to the gravitational force must fall within an extremely narrow range for planetary systems to remain stable.

Each constant individually exhibits both complexity (extreme improbability) and specification (conformity to the independent requirement for life-permitting conditions). The simultaneous fine-tuning of multiple independent parameters compounds these probabilities multiplicatively, generating CSI values that dwarf even the most generous estimates of probabilistic resources.
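Because independent probabilities multiply, their logarithms add, which makes the compounding easy to track. The sketch below assumes the parameters are statistically independent, as the paragraph states, and treats the 1% tolerance on the strong nuclear force as a probability of 0.01, which is a simplifying assumption rather than a figure from the text. The function name `joint_log10_probability` is hypothetical.

```python
import math


def joint_log10_probability(log10_probabilities):
    """Sum base-10 log-probabilities; valid only when the events are independent."""
    return math.fsum(log10_probabilities)


cosmological_constant = -120.0    # 1 part in 10^120, as quoted in the text
strong_force = math.log10(0.01)   # assumption: 1% tolerance read as p = 0.01

combined = joint_log10_probability([cosmological_constant, strong_force])
```

Further parameters, such as the ratio of the electromagnetic force to the gravitational force, would be appended to the list once a numerical estimate is assigned to them.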

Critics invoke multiverse hypotheses to dissolve the inference to design, but such responses merely relocate the problem without resolving it. Any multiverse-generating mechanism capable of producing the requisite diversity would itself require fine-tuning to generate universes rather than chaos. The inflaton field, the dynamics of the string theory landscape, and quantum branching mechanisms all presuppose specific laws and initial conditions that themselves exhibit CSI.

The explanatory filter thus reveals design inference as an eliminative process rather than an argument from ignorance. When chance and necessity prove causally inadequate to account for CSI, design emerges not as a gap-filling explanation but as the most empirically adequate hypothesis given the constraints of our experience with causal powers.

Design Inference vs. Argument from Ignorance

The design inference is sometimes dismissed as a "God of the gaps" argument—invoking divine action wherever current science lacks explanation. This mischaracterizes the argument entirely.

The design inference does not argue: "We don't know how X arose, therefore God."

Rather: "X exhibits the positive, characteristic features that in all other contexts reliably indicate intelligent causation—specified complexity, semiotic structure, irreducible functional integration—therefore the same type of cause is operative here."

Consider the parallel reasoning:

- Archaeologists conclude stone tools are designed not from ignorance of geological processes, but from positive knowledge of what agency produces versus what natural forces produce

- Cryptographers identify encrypted messages not from ignorance of random noise, but from recognizing statistical signatures that distinguish a meaningful signal from noise

- SETI researchers would recognize alien intelligence not from ignorance of astrophysics, but from identifying the hallmarks of designed communication

The design inference follows identical logic: it identifies positive signatures of intelligent causation based on our extensive experience with how different types of causes operate.

Moreover, the argument's strength lies not in a single "gap" but in convergent evidence—independent lines of investigation from molecular biology, information theory, cosmology, and consciousness studies all pointing toward the same conclusion. When archaeologists find pottery shards, written tablets, architectural foundations, and metallurgical artifacts at the same site, they don't conclude "we found four gaps in our knowledge." They recognize converging evidence for a civilization. Similarly, when specified complexity appears across independent domains—genetic code, protein folding, cosmological constants, rational consciousness—we observe consilience, not gap-filling.

This methodology transforms questions of ultimate origin from metaphysical speculation into empirical investigation. The universe exhibits precisely the informational signature we would expect if it were the product of transcendent intelligence—and precisely the signature we would not expect if it were the output of purely material processes operating without guidance or goal.