Chapter 2: Kolmogorov Complexity

"The LORD by wisdom founded the earth; by understanding he established the heavens;"

— Proverbs 3:19, ESV

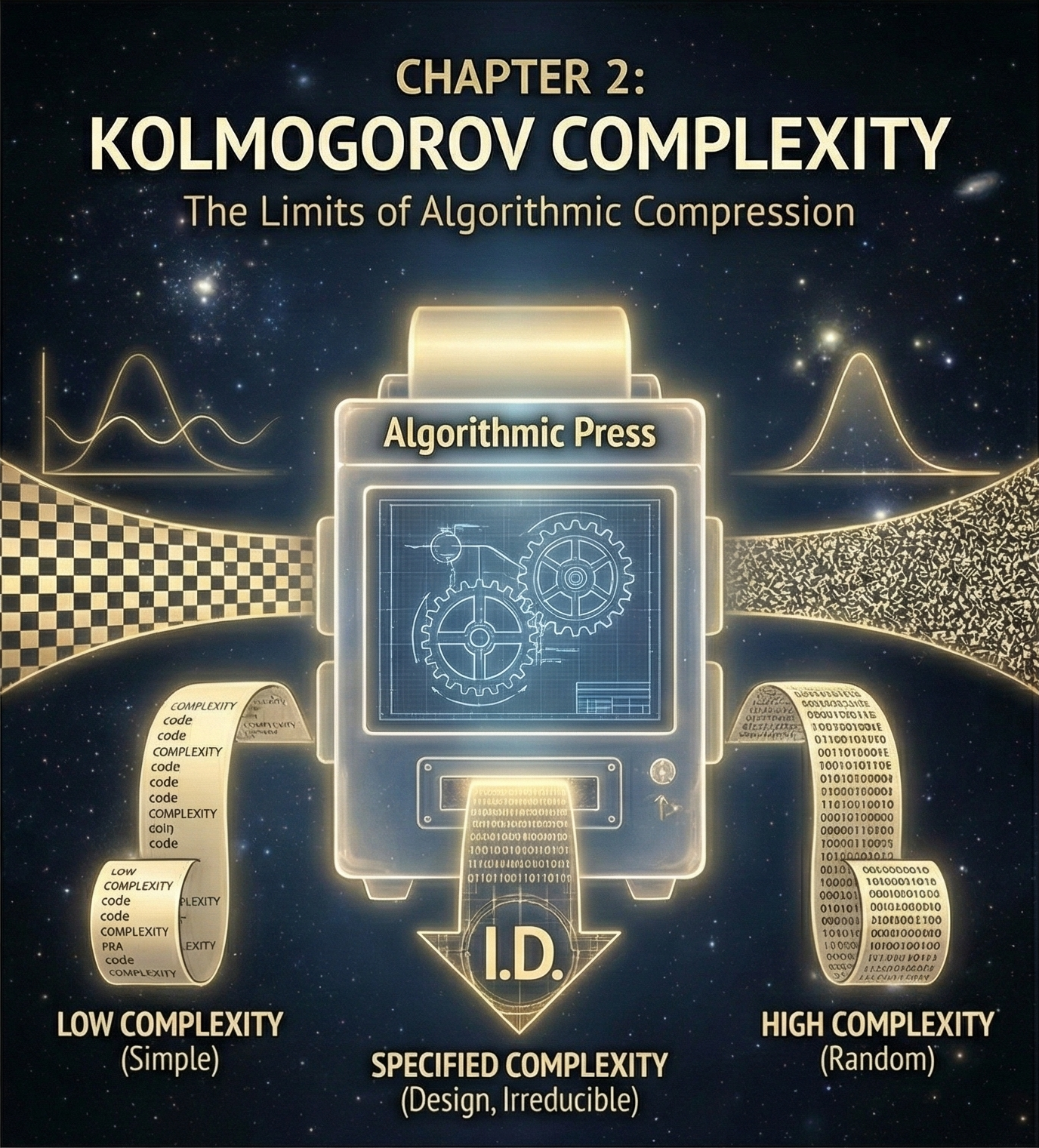

Kolmogorov complexity provides a mathematically rigorous foundation for distinguishing between different types of information-bearing structures. The Kolmogorov complexity K(x) of a string x equals the length of the shortest program that outputs x on a universal Turing machine. This metric captures our intuitive sense that some patterns require more 'explanation' than others—but with mathematical precision.
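
Stated formally, the definition reads as follows. The notation is an assumption introduced here for illustration rather than taken from the text: U is the fixed universal machine, p a program, and |p| its length in bits.

$$
K_U(x) = \min \{\, |p| : U(p) = x \,\}
$$

For any two universal machines U and V there is a constant c_{U,V}, independent of x, such that

$$
\left| K_U(x) - K_V(x) \right| \le c_{U,V}
$$

which is why the subscript is usually dropped and the quantity is written simply as K(x).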

Consider three binary strings of length 100:

String A: 0101010101... (alternating pattern)

String B: 1100010101... (random sequence, e.g. generated by fair coin flips)

String C: The first 100 bits of the binary expansion of π

String A has low Kolmogorov complexity: a short program can generate the entire sequence through simple iteration. String B, if truly random, almost certainly has high Kolmogorov complexity: no program substantially shorter than the string itself can reproduce it. String C presents the crucial case: it has low Kolmogorov complexity (an algorithm that computes π is compact) yet appears random on cursory inspection.
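
The contrast among the three strings can be sketched in code. K(x) itself is uncomputable, so this sketch uses the size of a zlib-compressed copy as an upper-bound proxy. That is an assumption of the illustration, not a measurement of K. The names `compressed_bits`, `pi_bits`, and `N_BITS` are hypothetical. The strings are 8,000 bits long rather than 100 because at 100 bits the compressor's fixed overhead would swamp the comparison. `os.urandom` stands in for a truly random source, and the bits of π are computed with Machin's formula in integer arithmetic.

```python
import os
import zlib

N_BITS = 8000  # longer than the text's 100 bits so zlib's fixed overhead is negligible


def compressed_bits(bits: str) -> int:
    """Size in bits of the zlib-compressed string: an upper-bound proxy for K."""
    packed = int(bits, 2).to_bytes((len(bits) + 7) // 8, "big")  # 8 bits per byte
    return 8 * len(zlib.compress(packed, 9))


def arctan_inv(x: int, scale: int) -> int:
    """Approximate scale * arctan(1/x) with the alternating Taylor series."""
    power = scale // x
    total = power
    sign, n = 1, 1
    while power:
        power //= x * x
        n += 2
        sign = -sign
        total += sign * (power // n)
    return total


def pi_bits(n_bits: int) -> str:
    """First n_bits binary digits of pi (starting with the integer part, '11')."""
    guard = 64  # extra bits to absorb rounding error in the series
    scale = 1 << (n_bits + guard)
    # Machin's formula: pi = 16*arctan(1/5) - 4*arctan(1/239)
    pi_scaled = 16 * arctan_inv(5, scale) - 4 * arctan_inv(239, scale)
    return format(pi_scaled >> guard, "b")[:n_bits]


string_a = "01" * (N_BITS // 2)                                          # alternating
string_b = "".join(f"{byte:08b}" for byte in os.urandom(N_BITS // 8))    # random
string_c = pi_bits(N_BITS)                                               # bits of pi

for name, s in [("A", string_a), ("B", string_b), ("C", string_c)]:
    print(f"String {name}: {len(s)} bits raw, {compressed_bits(s)} bits compressed")
```

Under these assumptions String A shrinks to a small fraction of its raw size, while Strings B and C both stay near their raw size. A general-purpose compressor cannot tell C from B, even though the `pi_bits` function that generates C is only a few lines long. That gap between apparent randomness and a short generating program is exactly the property attributed to String C above.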

This distinction illuminates why the genetic code and protein structures resist naturalistic explanation. DNA sequences exhibit neither the simple repetition of String A nor the incompressible randomness of String B. Instead, they display the signature of String C: apparent randomness masking deep algorithmic structure.

The genetic code's optimization properties exemplify this pattern. Francis Crick proposed that the code was a 'frozen accident': arbitrary codon assignments locked in by historical contingency. Instead, the code exhibits sophisticated error-minimization properties. Codons for hydrophobic amino acids cluster together in codon space, and codons for chemically similar amino acids tend to sit adjacent to one another. Single-nucleotide substitutions therefore typically produce amino acids with similar properties, minimizing the phenotypic impact of point mutations and translation errors.

Stephen Freeland and Laurence Hurst compared the standard genetic code with one million randomly generated alternative codes and found that, once realistic biases in mutation and mistranslation were taken into account, only about one alternative in a million tolerated errors better. This optimization requires global coordination across the entire translation system, precisely the type of algorithmic sophistication that characterizes designed systems rather than evolved ones.

Protein folding represents an even more striking example. Cyrus Levinthal's paradox demonstrates that an exhaustive random search through conformational space would require times vastly exceeding the age of the universe. Yet many proteins fold reliably within milliseconds to seconds through precisely orchestrated folding pathways. The primary sequence contains compressed instructions for three-dimensional architecture, a classic example of high algorithmic information density.
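
The arithmetic behind Levinthal's estimate can be reproduced in a few lines. Every figure below is an illustrative assumption of the kind used in textbook presentations of the paradox, not a value taken from this chapter: a 100-residue chain, three coarse conformations per residue, and a sampling rate of 10^13 conformations per second.

```python
# Illustrative assumptions only (textbook-style figures, not measurements).
RESIDUES = 100                    # length of the hypothetical protein chain
STATES_PER_RESIDUE = 3            # coarse conformations available to each residue
SAMPLES_PER_SECOND = 1e13         # conformations tried per second
SECONDS_PER_YEAR = 3.156e7
AGE_OF_UNIVERSE_YEARS = 1.38e10

# Size of the conformational space under these assumptions.
conformations = STATES_PER_RESIDUE ** RESIDUES

# Time to visit every conformation once, in years.
search_years = conformations / SAMPLES_PER_SECOND / SECONDS_PER_YEAR

# How many ages of the universe that search would take.
ratio = search_years / AGE_OF_UNIVERSE_YEARS

print(f"conformations:        {conformations:.2e}")
print(f"exhaustive search:    {search_years:.2e} years")
print(f"ages of the universe: {ratio:.2e}")
```

With these inputs the conformational space is on the order of 10^47 states, and an exhaustive search takes on the order of 10^27 years. Changing the assumed chain length or sampling rate shifts the exponent but not the qualitative gap between exhaustive search and observed folding times.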

Modern proteomics reveals additional layers of complexity. Protein function depends not merely on correct folding but on precise subcellular localization, temporal expression patterns, post-translational modifications, and interaction networks. Each functional protein exists at the intersection of multiple independent constraints, generating complex specified information (CSI) through the multiplication of improbabilities.

The RNA world hypothesis attempts to circumvent these problems by proposing self-replicating ribozymes as evolutionary precursors. But Harold Morowitz's thermodynamic analysis reveals that even the simplest self-replicating system requires coordination among hundreds of chemical reactions. Relative to the probabilistic resources available in our observable universe—considering plausible numbers of molecular interactions over relevant timescales—the spontaneous organization of such systems is overwhelmingly disfavored under models restricted to unguided chemical processes. This doesn't constitute a formal impossibility proof, but it does mean that if we take our background physical and chemical models seriously, the appearance of such organized complexity serves as powerful evidence for an additional, non-chance explanatory principle.

Algorithmic information theory thus provides objective criteria for distinguishing designed from undesigned complexity. Apparent randomness that conceals compact algorithmic structure, combined with functional specification, generates an informational signature that reliably indicates intelligent causation across all domains of our experience, from computer programs to architectural blueprints to musical compositions.

The molecular machinery of life exhibits precisely this signature: algorithmic compression married to functional specification, generating information densities that exceed the capacity of undirected natural processes while remaining well within the demonstrated capabilities of intelligent agency.

The Rational Structure Objection

Critics sometimes argue: "We observe rationality emerging from non-rational processes—stars produce elements, chemistry produces biology, neurons produce thought. So rational order doesn't need a rational ground."

But this objection misses what's actually happening. When we trace the emergence chain:

- Rationality emerges from computation

- Computation emerges from chemistry

- Chemistry emerges from quantum physics

- Quantum physics operates according to precise mathematical laws

At no point do we witness chaos producing order. We observe rationally structured physical processes producing rationally comprehending minds. The supposedly "non-rational" base layer isn't truly non-rational—it's governed by elegant mathematical symmetries, stable causal relations, and law-like regularities.

Evolution works precisely because fitness landscapes can be mathematically defined. Neural computation works because physics supports stable information mapping. The emergence of rationality succeeds only within an already-rational framework.

This raises the deeper question naturalism cannot answer: Why is the base-level structure rational at all? Why do mathematical laws govern physical processes? Why is the cosmos mathematically intelligible rather than chaotic?

The naturalist must accept as brute fact that reality just happens to be rationally structured in precisely the way required for rational minds to emerge. The theist explains this: rational structure flows from a Rational Ground. When we trace the emergence of complexity, we don't find chaos at the foundation—we find mathematical elegance, stable laws, and algorithmic precision. The question is whether this foundation is itself explained, or whether it remains an inexplicable cosmic coincidence.